This paper was actually accidental: I was enrolled in a graduate class and had to come up with a project for the quarter. I had a very out-there idea of taking what we had learned about VLIW machines and applying it to configurable computing architectures.

A basic tenet of configurable computing, and the one that got me hooked as a senior in undergraduate engineering, is the idea that the lines between hardware and software could be blurred. Software had always been tailored for the hardware that it ran on, whether by hand or by the compiler. Well, what if you could automatically design hardware that better fit the needs of a particular application? My idea was to take the well-known tricks of scheduling for VLIW machines and use them as a set of “hints” for what the ideal hardware could look like.

The class professor wasn’t too hot on this idea, but let me do it anyway ‘cuz I’m tenacious. So for the next few weeks I poked around an open-source compiler and messed with making a mythical hardware design out of the compiler’s scheduler and ended up with some interesting results.

For example, most DSPs have a single-instruction multiply-and-add. This is because the most common thing they do is multiply and add (you can find a bunch of these in my previous paper). Well, it turns out that you get only modest savings from doing that. My research showed that for most implementations, making the individual multiply and add units faster performed better than building a monolithic one.
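To make the multiply-and-add pattern concrete, here is a minimal sketch of a direct-form FIR filter, the kind of kernel the paper's abstract mentions. It is purely illustrative and not code from the paper: the function name `fir_filter` and the operation counters are my own assumptions, added only to show that every multiply in the inner loop is paired with exactly one add.

```python
def fir_filter(samples, taps):
    """Direct-form FIR filter: y[n] = sum over k of taps[k] * samples[n - k].

    Returns the filtered output plus the number of multiplies and adds
    performed, to show that the two operations occur in lockstep.
    (Illustrative helper; names and counters are hypothetical.)
    """
    outputs = []
    mul_count = 0
    add_count = 0
    for n in range(len(samples)):
        acc = 0.0
        for k, tap in enumerate(taps):
            if n - k < 0:
                break  # no input history before the first sample
            product = tap * samples[n - k]  # the "multiply" half
            acc = acc + product             # the "add" half
            mul_count += 1
            add_count += 1
        outputs.append(acc)
    return outputs, mul_count, add_count


if __name__ == "__main__":
    y, muls, adds = fir_filter([1, 2, 3, 4], [0.5, 0.5])
    print(y, muls, adds)
```

A fused multiply-and-add instruction collapses the two marked lines into one operation; the alternative discussed above keeps them as separate units and makes each one faster.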

The results were interesting enough that the prof convinced me to submit my work to a handful of conferences. Ha! This was literally a stretched-out homework assignment and he expected it to be accepted at a research conference? Well, it was, and at one of the most prestigious ones: ICCAD. Here’s a link to my paper; the abstract and an embedded copy can be found below.

Abstract

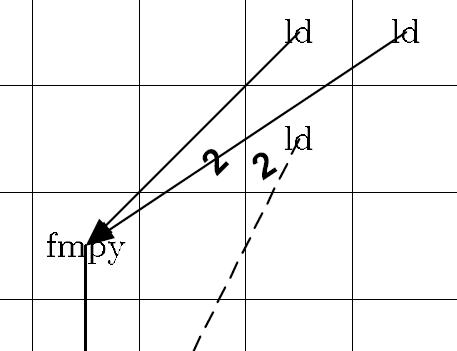

Many previous attempts at ASIP synthesis have employed template matching techniques to target function units to application code, or directly design new units to extract maximum performance. This paper presents an entirely new approach to specializing hardware for application specific needs. In our framework of a parametrized VLIW processor, we use a post-modulo scheduling analysis to reduce the allocated hardware resources while increasing the code’s performance. Initial results indicate significant savings in area, as well as optimizations to increase FIR filter code performance 200% to 300%.